The Joy of Not Coding (Part 2): When AI Helps, When It Hurts, What to Watch For

At the end of Part 1, I was ready to keep using AI code assistants, but this time as a teammate. The next experiment was simple: build an internal onboarding tool that creates a hierarchy of entities for a customer. It would be a small Spring Boot CLI application that hits a few internal endpoints and automates the dull setup tasks that most engineers would spend more time moaning about than building. So it fell to me: a perfectly scoped, perfectly boring task, safe to build with an AI assistant who won't complain.

Small, incremental steps

Having learned my lesson from last time's big-bang approach, I broke it down:

- Application scaffolding

- One endpoint

- One onboarding plan YML

- Sensible tests

- Review

- Run and test

- Repeat

This sort of boilerplate went surprisingly smoothly. Within an hour, I could successfully onboard the root entity. The report produced at the end of the run was genuinely user-friendly, and the AI even suggested a dry-run option, which turned out to be helpful.

Adding the second- and third-level endpoints was smooth enough that I started to believe I’d finish this tool quickly.

Test struggles

Even though I was explicit about test quality, quantity, and when to run them, the assistant drifted into a familiar pattern:

- Update a feature → test suite explosion

- Claim that the feature was complete → broken tests elsewhere

- Fix one test → break others

- Throw its hands up in despair → rewrite functionality and rewrite tests

Slowly, I became less reassured. The design was no longer aligned with my mental picture. The code looked different every time I saw it. It didn’t feel like my application anymore.

But I persisted. Just two endpoints to go.

All good!

My luck was about to run out. The code was written. The tests passed. Time to onboard all the data for a new customer.

The app reported success, with little bright green checkmarks everywhere. The sequence looked correct. Except the last two entities were missing from the database.

Asking the assistant to fix it was a waste of time; it was more confused than I was. After digging through coding styles I haven’t seen in years (think deeply nested for loops), I discovered that an earlier entity had failed due to a mistake in my data. Despite an impressive number of ugly try/catch blocks, the error had been swallowed and success reported anyway.
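To make that failure mode concrete, here is a minimal Java sketch of the pattern next to one alternative. Every name in it (`OnboardingRunner`, `EntityClient`, `Result`, and the entity names) is hypothetical, not the tool's actual code, and `EntityClient` stands in for the HTTP call to an internal endpoint. The first method catches and logs the exception but still returns success; the second records a result for every attempt and stops at the first failure, on the assumption that children in the hierarchy can't be created without their parent.

```java
import java.util.ArrayList;
import java.util.List;

// Minimal sketch with hypothetical names; not the onboarding tool's real code.
class OnboardingRunner {

    // Hypothetical outcome of onboarding a single entity.
    record Result(String entity, boolean ok, String error) {}

    // Hypothetical stand-in for the HTTP call to an internal endpoint.
    interface EntityClient {
        void create(String entity) throws Exception;
    }

    // Anti-pattern: the exception is logged and then swallowed, and nothing
    // records the failure, so the caller is told everything worked.
    static boolean onboardSwallowing(List<String> entities, EntityClient client) {
        for (String e : entities) {
            try {
                client.create(e);
            } catch (Exception ex) {
                System.err.println("warn: " + ex.getMessage()); // swallowed
            }
        }
        return true; // "success" regardless of what happened
    }

    // Alternative: every attempt produces a Result. The run stops at the first
    // failure, assuming later entities depend on earlier ones in the hierarchy.
    static List<Result> onboard(List<String> entities, EntityClient client) {
        List<Result> results = new ArrayList<>();
        for (String e : entities) {
            try {
                client.create(e);
                results.add(new Result(e, true, null));
            } catch (Exception ex) {
                results.add(new Result(e, false, ex.getMessage()));
                break;
            }
        }
        return results;
    }

    // Success means every planned entity has a successful Result.
    static boolean allSucceeded(List<Result> results, int expected) {
        return results.size() == expected && results.stream().allMatch(Result::ok);
    }

    public static void main(String[] args) {
        List<String> plan = List.of("root", "region", "site", "team", "user");
        // Simulated bad data in an earlier entity.
        EntityClient client = entity -> {
            if (entity.equals("site")) throw new Exception("invalid site code");
        };
        System.out.println("swallowing: " + onboardSwallowing(plan, client));
        List<Result> results = onboard(plan, client);
        System.out.println("reported: " + allSucceeded(results, plan.size()));
        results.forEach(System.out::println);
    }
}
```

With the simulated bad data, the swallowing version still reports success, while the second version reports failure and shows exactly which entity broke and why.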

My AI teammate found this very difficult to fix. After several rounds of tests breaking, passing, and breaking again, it finally reported the error correctly, but by then, the code was structured so differently I barely recognised it. It felt like a living thing that changed shape every time I looked away.

The last failure was spectacular. The flow was supposed to:

- Read the config

- Read the data

- Call endpoint

- Report success/failure

And it did, according to its own output.

"All entities onboarded successfully."

Except they weren't. The vital final entity wasn't created because there was no code to actually invoke the endpoint. All the surrounding fluff executed, but the endpoint was never called.
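One way to keep the "report" step honest is to make it depend only on what the endpoint actually returned. The Java sketch below assumes a hypothetical `Endpoint` interface that returns an HTTP-style status code, and assumes the config and data have already been read into a list of entity names; `OnboardingFlow`, `StepResult`, and `CountingEndpoint` are illustrative names. Because a `StepResult` can only be built from a call's response, the report can't claim success for an entity that was never sent. The counting test double lets a test assert that the call really happened instead of trusting the tool's own output.

```java
import java.util.ArrayList;
import java.util.List;

// Minimal sketch with hypothetical names; not the onboarding tool's real code.
class OnboardingFlow {

    // Hypothetical abstraction over an internal endpoint; returns a status code.
    interface Endpoint {
        int post(String entity);
    }

    record StepResult(String entity, int status) {
        boolean ok() { return status >= 200 && status < 300; }
    }

    // "Call endpoint" step: the only way to get a StepResult is to make the call.
    static List<StepResult> run(List<String> entities, Endpoint endpoint) {
        List<StepResult> results = new ArrayList<>();
        for (String entity : entities) {
            results.add(new StepResult(entity, endpoint.post(entity)));
        }
        return results;
    }

    // "Report" step: compares successful responses against the plan, so a
    // missing call shows up as a shortfall rather than a green checkmark.
    static String report(List<String> planned, List<StepResult> results) {
        long ok = results.stream().filter(StepResult::ok).count();
        return ok == planned.size()
                ? "All " + planned.size() + " entities onboarded successfully."
                : ok + " of " + planned.size() + " entities onboarded.";
    }

    // Test double that records every real invocation.
    static class CountingEndpoint implements Endpoint {
        final List<String> calls = new ArrayList<>();

        public int post(String entity) {
            calls.add(entity);
            return 201;
        }
    }

    public static void main(String[] args) {
        List<String> plan = List.of("root", "child", "grandchild");
        CountingEndpoint endpoint = new CountingEndpoint();
        List<StepResult> results = run(plan, endpoint);
        System.out.println(report(plan, results));
        System.out.println("endpoint calls: " + endpoint.calls);
    }
}
```

A test built on `CountingEndpoint` fails if the invocation is missing, even when every other step runs and prints its output.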

It's working(ish)!

At the end of this, I did have an onboarding tool. I don’t particularly want to touch it again, but it’s a tedious task ticked off the list. It’s not customer-facing, and the README very clearly warns people about who wrote the code 😄

The problem is that only AI can extend it or fix its warped structure now:

- Convoluted exception handling

- Nested loops with nested conditionals

- Code that is never stable or predictable to read: it constantly changes
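For contrast with the nested loops, here is a sketch of one flatter shape for walking an entity hierarchy, assuming the onboarding plan YML has already been parsed into a tree. `PlanWalker` and `Node` are hypothetical names, and YAML parsing is left out. A single recursive method replaces one loop per hierarchy level, a guard clause rejects bad data up front, and parents are always listed before their children.

```java
import java.util.ArrayList;
import java.util.List;

// Minimal sketch, assuming the plan is already parsed into hypothetical Nodes.
class PlanWalker {

    record Node(String name, List<Node> children) {
        static Node leaf(String name) { return new Node(name, List.of()); }
    }

    // Returns entity paths in creation order (pre-order: parent before children).
    static List<String> creationOrder(Node root) {
        List<String> order = new ArrayList<>();
        walk(root, "", order);
        return order;
    }

    // One recursive method instead of a for-loop per level, so nesting depth
    // in the code no longer grows with the depth of the hierarchy.
    private static void walk(Node node, String parentPath, List<String> order) {
        // Guard clause: fail fast on bad data instead of adding another nested if.
        if (node.name() == null || node.name().isBlank()) {
            throw new IllegalArgumentException("entity under '" + parentPath + "' has no name");
        }
        String path = parentPath.isEmpty() ? node.name() : parentPath + "/" + node.name();
        order.add(path);
        for (Node child : node.children()) {
            walk(child, path, order);
        }
    }

    public static void main(String[] args) {
        Node plan = new Node("acme", List.of(
                new Node("emea", List.of(Node.leaf("london"), Node.leaf("paris"))),
                Node.leaf("apac")));
        creationOrder(plan).forEach(System.out::println);
    }
}
```

With the sample plan in `main`, this prints `acme`, `acme/emea`, `acme/emea/london`, `acme/emea/paris`, and `acme/apac`, one per line. If the order is computed before any endpoint is called, a blank entity name stops the run before anything is created.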

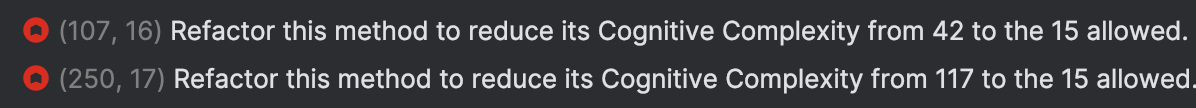

For kicks, I ran it through Sonarqube. The first is an example of the impossibly deep nesting:

And then, the complexity:

What matters for engineering leaders

Use AI to automate the boring parts of life.

Yes, you may be stuck with the code if you need to maintain or extend it, but for internal utilities, this tradeoff is often worth it. You stay focused on solving real problems and shipping value, and you offload the grunt work.

But this only works if your team can properly assess risk. If risk-management skills are weak, you need to coach and strengthen them, now more than ever.

AI ships code quickly, but tests are no longer an automatic safety net

The responsibility for architecture, design, and code shape still lies with humans. AI accelerates construction, but it does not carry accountability.

“Everything passed” doesn’t mean what it used to.

Review the code occasionally. Check if it still resembles something coherent. Watch for smells:

- Constant rewrites

- Bloated test suites

- Bugs that reveal a misunderstanding of the intended functionality

Understand what you're gaining

Pay attention to how your team uses AI coding assistants. Be curious about what they’re actually delivering.

- Are you shipping faster while keeping quality high?

- Are releases carrying more value?

- Are you improving productivity and efficiency, and can you articulate how?

If not, your team may simply be enamoured with the latest gadget while quietly accumulating debt and cost.

Celebrate the success stories. Use them as reference points for the sceptical or cautious.

AI amplifies your engineering culture

For better or for worse: if your standards are vague or poor, your codebase will become vaguer and poorer.

If your team is disciplined, AI becomes a multiplier.

As a leader, be deliberate about setting guardrails and make tough calls about what AI should and shouldn’t touch.

Make sure your team can quickly identify issues and assess whether a hotfix is reliable or a landmine. If your team depends heavily on AI to hotfix and you have mission-critical software with uptime or support SLAs, that’s a red flag.

Ownership must live somewhere

And it’s not with the AI.

If you hear lines like “I’m not sure, <AI assistant> wrote it,” you’ve lost control of the system.

Ownership must stay with humans who understand:

- the intent

- the constraints

- and the future purpose of the system

Conclusion

AI was great at removing toil, and I'm happy to have this onboarding tool. AI assistants will also get better over time, so it's not all doom and gloom.

But right now, the parts that require judgement, direction, design, and accountability still belong to us.

And maybe that’s the real joy of not coding: choosing where your attention actually matters, and letting the rest be automated, with eyes wide open.

Recommended soundtrack: Master of Puppets (Metallica)